Many data centers use containment for maximum efficiency.

This is the second post in a series on data center efficiency and how to achieve it, whether your data center is an on-premises IT room, colocation space, or a web-scale building.

Containment, as we said before, is the best bang for your buck in making your data center more efficient. By tightly controlling and limiting the spaces where cool and warm air flow, and eliminating places where they can mix, you reduce the work your cooling system has to do.

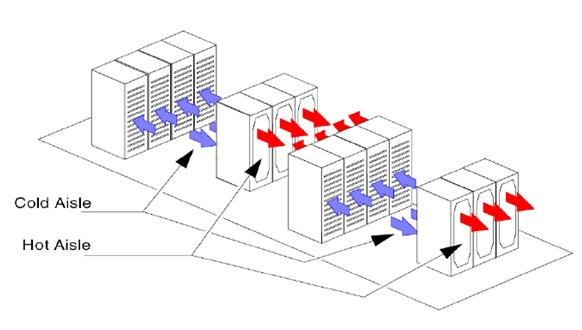

There are three areas to look at when creating a containment system: the cold aisle, the hot aisle, and the isolation of the two from each other (“blanking”). Many efficient data centers physically arrange their server racks into hot and cold aisles, with rows of racks oriented so that the front (intake) sides of the servers face the supply air in the cold aisle. If you walk perpendicular to the rows, they should alternate the direction they face: front to front across each cold aisle and back to back across each hot aisle.

Above: An illustration of datacenter cabinets arranged in a hot-aisle/cold-aisle configuration.
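To make the alternating layout concrete, here is a small Python sketch. It assumes rows are listed in the order you meet them walking perpendicular to the rows, and that each row's front (intake) side faces either N or S; the function names and the N/S labels are hypothetical, chosen only for illustration. Each aisle is labeled cold (two intake sides open onto it), hot (two exhaust sides open onto it), or mixed (one row's exhaust blows at another row's intakes).

```python
def alternating_layout(num_rows: int) -> list[str]:
    """Facing direction of each row's front (intake) side, alternating N/S."""
    return ["N" if i % 2 == 0 else "S" for i in range(num_rows)]


def classify_aisles(facings: list[str]) -> list[str]:
    """Label the aisle between each pair of adjacent rows.

    facings[i] is the direction row i's front (intake) side faces, with rows
    listed from south to north. "N" means the front faces the next row up.
    """
    aisles = []
    for lower, upper in zip(facings, facings[1:]):
        if lower == "N" and upper == "S":
            aisles.append("cold")   # both intake sides open onto this aisle
        elif lower == "S" and upper == "N":
            aisles.append("hot")    # both exhaust sides open onto this aisle
        else:
            aisles.append("mixed")  # one row's exhaust blows at the other row's intakes
    return aisles


print(classify_aisles(alternating_layout(4)))  # ['cold', 'hot', 'cold']
print(classify_aisles(["N", "N", "N"]))        # ['mixed', 'mixed']
```

The second call shows why rows that all face the same way defeat containment: every aisle ends up carrying one row's exhaust straight into the next row's intakes.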

Cold aisles

Cold aisles are where the data center cooling system delivers its supply air, driven by fans and channeled through ducts or other positive-pressure paths, to give the servers a continuous stream of air at the proper temperature and humidity. The most common way for data centers to supply air is via raised floors. In that scenario the under-floor plenum is itself a contained space, but once the air rises through perforated floor tiles into the cold aisle, it should ideally be contained there as well to increase efficiency.
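One way to check that the cold aisle is actually delivering air at the proper temperature is to compare measured server inlet temperatures against a target range. The sketch below assumes the ASHRAE TC 9.9 recommended inlet range of 18 to 27 °C (64.4 to 80.6 °F) and covers temperature only, not humidity; the function name, rack IDs, and readings are hypothetical.

```python
# Assumed target: ASHRAE TC 9.9 recommended inlet range, 18-27 °C dry bulb.
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0


def out_of_range_inlets(
    inlet_temps_c: dict[str, float],
    low: float = RECOMMENDED_MIN_C,
    high: float = RECOMMENDED_MAX_C,
) -> dict[str, float]:
    """Return only the racks whose inlet temperature falls outside [low, high]."""
    return {rack: t for rack, t in inlet_temps_c.items() if not low <= t <= high}


# Hypothetical rack IDs and readings in °C.
readings = {"A01": 21.5, "A02": 24.0, "A03": 29.3}
print(out_of_range_inlets(readings))  # {'A03': 29.3}
```

A rack that reads hot while its neighbors are fine can point to hot exhaust finding its way back into the cold aisle, which is exactly the mixing that containment is meant to prevent.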

If a data center doesn’t have a raised floor, it most likely supplies air to the cold aisles via overhead ductwork. Cold aisle containment confines the cold air to the space immediately in front of the servers. This can take many forms, such as vinyl curtains, similar to what you might see in a grocery store’s refrigerated section, or lightweight walls and doors made of translucent materials such as polycarbonate. Often, cold aisle containment can be as simple as the supply ductwork itself forming a “roof” over the aisle. The goal is to create and maintain a minimal space through which the supply air is delivered to the server racks.

Above: Photo of a fully contained hot aisle in a datacenter.

Hot aisles

The hot aisle is the back side of your server racks, where the servers expel their exhaust. The components inside the servers generate heat: the integrated circuits, especially CPUs and GPUs, produce the most, but power supplies, hard drives, and solid-state memory also contribute. Heat is a byproduct of turning electrical energy into the bits that are the servers’ output; in practice, nearly all of the power a server draws ends up as heat.

Once that heat leaves your servers, it has to be transported away, either to the intake (return) side of the air conditioning system or outside the building, depending on how your facility handles hot air. Ideally that path should be simple and unobstructed. Most data centers take advantage of hot air’s natural tendency to rise by capturing it in an enclosed space below the room ceiling and pulling it away with fans that create negative pressure. Enclosing the hot aisle with walls and chimney-like spaces keeps the hot air flowing up and out.
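How much air does it take to carry that heat away? A standard sensible-heat balance answers it: heat removed = air density × volumetric airflow × specific heat × temperature rise from server intake to exhaust (P = ρ · V · c_p · ΔT). The Python sketch below solves that for airflow, assuming constant air properties of about 1.2 kg/m³ and 1005 J/(kg·K); the function name is hypothetical, and the 10 kW rack and 12 K rise are illustrative numbers, not measurements.

```python
# Assumed air properties, treated as constants (roughly 20 °C at sea level).
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KGK = 1005.0
M3S_TO_CFM = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(it_load_w: float, delta_t_k: float) -> float:
    """Airflow needed to remove it_load_w of heat at a given intake-to-exhaust
    temperature rise, from P = rho * V * c_p * dT solved for V."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return it_load_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KGK * delta_t_k)


flow = required_airflow_m3s(10_000, 12)  # illustrative 10 kW rack, 12 K rise
print(f"{flow:.2f} m^3/s, about {flow * M3S_TO_CFM:.0f} CFM")  # 0.69 m^3/s, about 1464 CFM
```

Because airflow scales with 1/ΔT, the same relation applies at the cooling units: if cold supply air bypasses the servers and dilutes the hot return air, the return-to-supply temperature difference shrinks and the cooling system has to move more air to remove the same heat.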

Above: Blanking panels inserted where servers have been removed. This prevents hot exhaust air from the servers from mixing into the cold supply air.

Blanking

The most important aspect of containment is eliminating any areas where cold supply air and hot exhaust air can mix. The simplest path to success is blanking: filling in any open areas in the rack where air can flow. The goal is to force air to pass only THROUGH the servers themselves, driven by their internal fans. All other routes for air should be blocked off. Blanking panels in empty rack slots, together with filling any gaps around the racks themselves, effectively seal off the areas where air can mix. When your data center has a clean, contained, and direct flow of air from supply, through your IT equipment, and out to exhaust, with no mixing of hot and cold air, your cooling system can do a whole lot less work for the same result.
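Why does mixing cost so much? A simple energy balance helps: if a fraction of the air reaching the server intakes is hot exhaust that leaked back through unblanked gaps, the intake temperature is roughly the flow-weighted average of the supply and exhaust temperatures. The sketch below assumes both streams have the same specific heat and treats the exhaust temperature as fixed, which is a simplification; the function names, temperatures, and recirculation fraction are hypothetical.

```python
def mixed_inlet_temp(supply_c: float, exhaust_c: float, recirc_fraction: float) -> float:
    """Server intake temperature when recirc_fraction of the intake air is
    recirculated exhaust (flow-weighted average, equal specific heats assumed)."""
    if not 0.0 <= recirc_fraction <= 1.0:
        raise ValueError("recirc_fraction must be between 0 and 1")
    return (1 - recirc_fraction) * supply_c + recirc_fraction * exhaust_c


def required_supply_temp(target_inlet_c: float, exhaust_c: float, recirc_fraction: float) -> float:
    """Supply temperature needed so the mixed intake air still hits
    target_inlet_c (mixed_inlet_temp solved for supply_c)."""
    if not 0.0 <= recirc_fraction < 1.0:
        raise ValueError("recirc_fraction must be in [0, 1)")
    return (target_inlet_c - recirc_fraction * exhaust_c) / (1 - recirc_fraction)


# Illustrative: 20 °C supply, 35 °C exhaust, 20% of intake air recirculated.
print(f"{mixed_inlet_temp(20, 35, 0.2):.1f}")      # 23.0
print(f"{required_supply_temp(20, 35, 0.2):.2f}")  # 16.25
```

In this example, with a fifth of the intake air recirculated, holding a 20 °C server inlet would require supplying 16.25 °C air instead of 20 °C air, and producing colder air generally takes more cooling energy. Blanking drives the recirculation fraction toward zero.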

Looking for more ways to save money on data center services? Here is a case study where we saved Amdocs 50% on data center costs.